It all started with a simple question: “How much is verification going to cost?”

This is an important question since verification is a major part of System-on-Chip development efforts – 56% according to a recent study (the 2012 Wilson Research Group study sponsored by Mentor Graphics). The same study shows that verification engineers spend 35% of their time in debug.

Understanding the cost of bugs seems like a good place to start looking for answers.

The Power of Ten

The table below was presented at DAC 2004 as part of a verification panel:

This table expresses the consensus notion that bugs cost more to fix later in the design process, but a uniform tenfold multiplier between such different development stages seems unlikely. Similar estimates are common in industry literature (especially in vendor marketing presentations) but there don’t appear to be any public studies supporting these ratios.

If we knew the actual multipliers for each stage, it would allow us to make more reasoned decisions about tool and methodology changes to reduce verification cost.

The table above and similar estimates for hardware development appear to have taken their inspiration from software metrics. This seems reasonable – with synthesizable hardware description languages, behavioral testbenches, and increasing use of software models, hardware design projects are looking more and more like large, complex software development projects.

Let’s see if we can gain some insight from the world of software engineering.

Software cost-to-fix defects

A quick search of the software engineering literature turns up many charts illustrating the idea that defects become exponentially more expensive to fix in later design phases. Often they show the same power of ten per development phase multiplier we found in hardware development literature. For example:

Often the idea is expressed in a less whimsical but no more accurate chart or table and used to make serious arguments for process improvements ranging from more code reviews to agile methods. What is missing from nearly all of these charts and tables is any description of what is actually being counted as the cost of fixing the defects, and in what phase the defects were introduced.

Software Metrics Archaeology

If you trace through the tangle of references and attempt to find original sources as Graham Lee did in this blog post, you eventually end up with what appears to be the most substantial source – this chart with the results of studies from the late 1970s:

Despite some confusion over what is actually being counted as cost and the studies having been conducted at a time when punch cards were the norm, these results live on in many forms. The data has been misinterpreted, generalized, and extrapolated first for new software development methodologies and now hardware development.

The original interpretation was that errors found in later phases of development are more expensive to fix since they have more documentation and other collateral that would have to be revised.

A more recent interpretation is that the longer a defect is latent in the design, the more expensive it is to fix. This is often illustrated with a simple exponential curve on a linear scale chart. The rationale here is apparently that the amount of rework increases exponentially with time.

Phase Containment

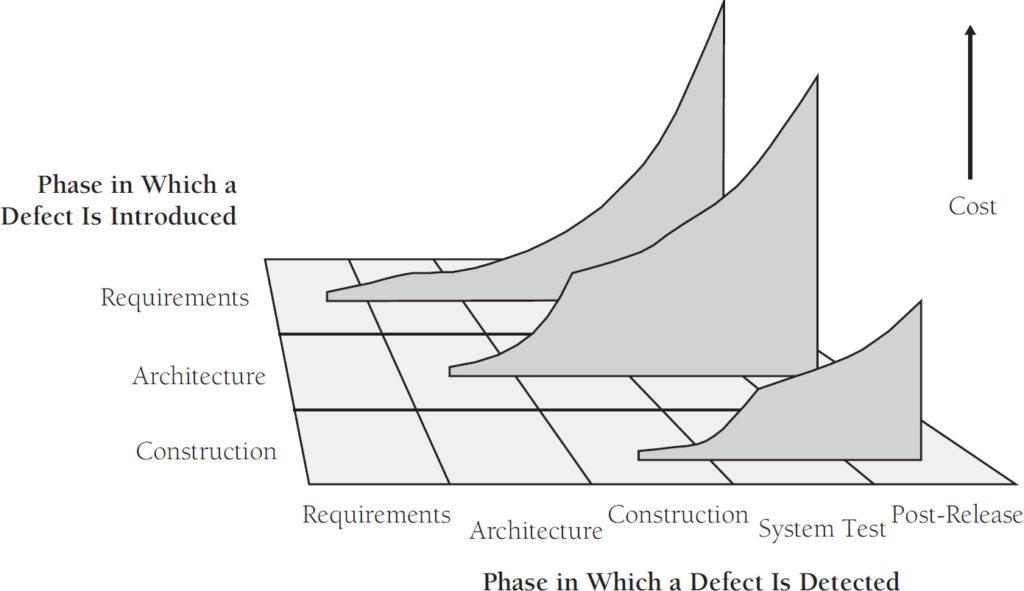

“Time latent in the design” was later refined to reflect the idea that a defect is cheapest to find and repair in the project phase in which it was introduced. For example:

This interpretation gave rise to the idea of “phase containment” of errors, with associated methodologies and metrics.

Phase containment makes sense in hardware development, because different stages of development (e.g., architecture, IP RTL, RTL integration, etc.) are often done by different teams, and handling patch deliveries and debugging someone else’s design in a different environment adds to the cost of every fix.

Is measuring cost per defect even a good idea?

Capers Jones argues that software cost-to-fix is not directly related to the phase itself, but rather to the number of bugs being found and fixed in that phase. The net effect, Jones argues, is to make quality improvements appear more expensive: when the overall costs are amortized over fewer bugs, the apparent cost per bug rises.

Along the way he builds a simple cost model of the software development process and uses it to illustrate how different accounting of costs can lead to different conclusions. While there are differences between software and hardware development, this is the type of modeling we need in order to rationally evaluate tool and methodology changes for hardware development flows.
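The amortization effect is easy to see with a little arithmetic. The sketch below is not Jones's model. It is a minimal illustration that assumes a phase has a fixed cost (paid no matter how many bugs are found) plus a variable cost per bug. The function name and all of the numbers are hypothetical and chosen only to show the trend.

```python
def cost_per_defect(fixed_cost, variable_cost_per_defect, defects_found):
    """Apparent cost per defect when a phase's fixed cost is spread
    over the defects found in that phase (assumed, simplified model)."""
    total_cost = fixed_cost + variable_cost_per_defect * defects_found
    return total_cost / defects_found


# Hypothetical numbers, for illustration only.
FIXED_COST = 10_000.0    # e.g. building and running the tests, bugs or no bugs
VARIABLE_COST = 500.0    # e.g. debug and repair effort for each bug

for defects in (50, 10, 2):
    total = FIXED_COST + VARIABLE_COST * defects
    per_defect = cost_per_defect(FIXED_COST, VARIABLE_COST, defects)
    print(f"{defects:>2} defects: total cost {total:>7.0f}, cost per defect {per_defect:>6.0f}")
```

With these made-up inputs, the total cost of the phase falls as fewer bugs are found, while the cost per bug climbs. A project that improved its quality would look worse on a cost-per-defect chart even though it spent less overall.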

In Summary

To make important decisions about hardware design flow investments in tools and methodology, we are relying on 40-year-old software development data from the punch card era. That data has been misinterpreted, generalized, and extrapolated, first to new software development methodologies and now to hardware development.

Even though the supporting data for these charts is lacking, the trends depicted have some similarity to what we see on real projects. We just need a more accurate model of the hardware development process in order to make sure we are making the correct decisions.

The next post will describe a cost model that accounts for the various activities in the hardware development process and provides a basis for identifying and evaluating design flow improvements.

© Ken Albin and System Semantics, 2014. All Rights Reserved.